I always describe the benefits of cloud computing in three ways:

1. Business Strategy (shared responsibility between the consumer & the CSP, fearless updates & upgrades, global collaboration, technological transformation, and staying ahead of the competition)

2. Technological Flexibility (infra scalability, storage options, service models [IaaS, PaaS, SaaS], features and tools)

3. Operational Efficiency (global accessibility, infra/app/data security, agility that speeds time to market, the shift from CapEx to OpEx, on-demand pay-as-you-go pricing)

We have known these cloud computing benefits for many years now. In my experience as a Cloud Solution Consultant, I have worked with many customers across the globe, and below is a consolidated list of questions from the C-level executives I have met. They are all about cloud adoption and migration:

1. How is the public cloud better than on-premises?

2. How complex is migrating our corporate data center to the public cloud?

3. Can our current applications be deployed to the cloud, and how can we assess them?

4. What does the infra/app migration cost?

5. What TCO and ROI benefits will we get if we move to the cloud?

6. Is the public cloud more secure than on-premises?

7. How are our competitors using the public cloud?

Answering these questions is why I was engaged. What I tell these valued clients is this:

We migrate to the cloud through a few essential steps that evaluate the current situation, provide the details needed to answer the questions above, and produce a roadmap for future public cloud adoption.

I always summarize these steps as Initiate, Assess, Approve, Build & Migrate:

Initiate: Enterprises start the plan to move to the public cloud according to their desired vision, bringing all technical and business stakeholders onto one platform and kicking off the planned steps (for example, engaging a strategic partner, building a CoE team, and acquiring the necessary knowledge).

Assess: Assess the current state of on-premises infrastructure, applications, data, security & networking against the target public cloud platforms.

Approve: Getting plans approved is standard in any organization. The plan must adhere to organizational policies, regulations, and compliance requirements, and the business needs to approve the cloud adoption plan.

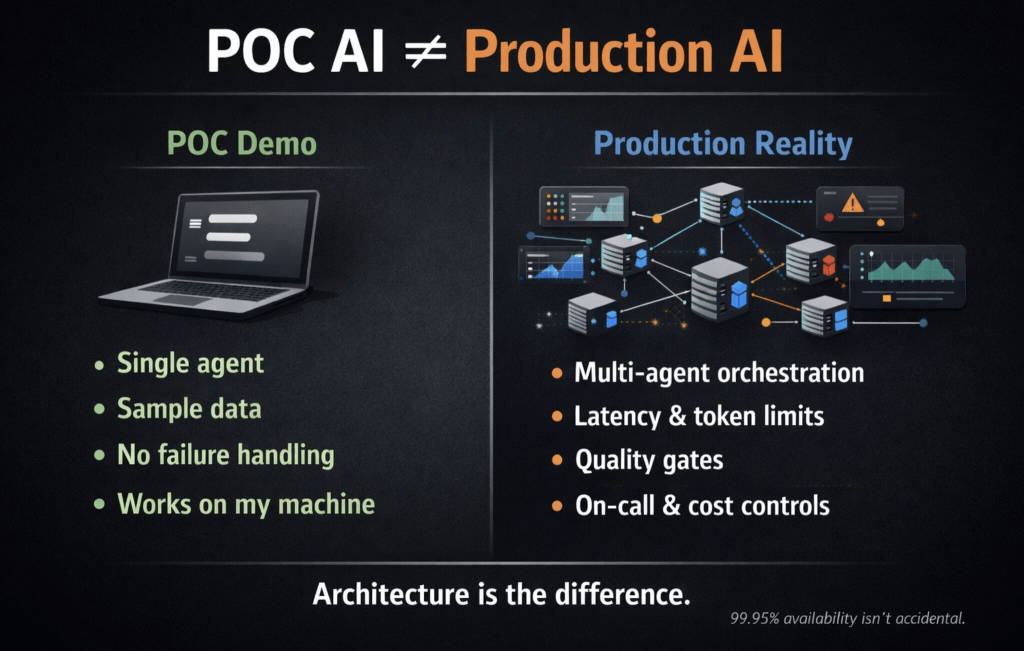

Build: Build a demo/POC environment in the public cloud that can support infra/app/data hosting.

Migrate: Move simple workloads to the cloud first and validate the benefits.

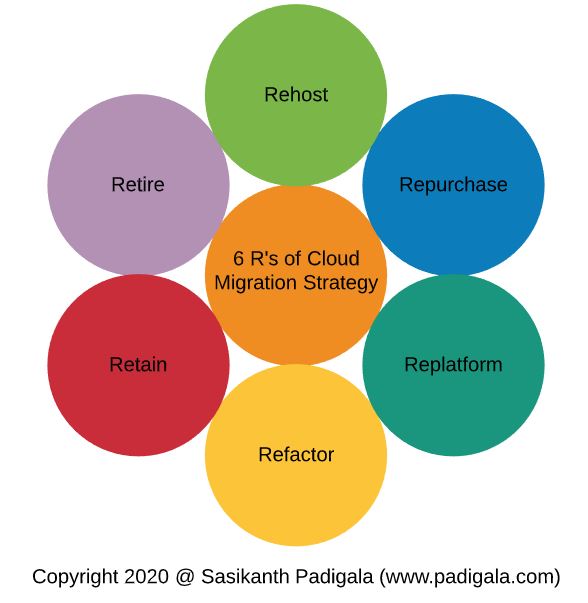

Later, leverage the tools & services of the chosen CSP to build a secure, reliable, and efficient cloud platform that can support the 6 Rs (Re-hosting, Re-platforming, Repurchasing, Refactoring, Retiring & Retaining).

So far, I have advised my customers on the benefits of cloud computing and the strategic steps for migrating to the cloud. But what approach or method should they follow?

That is where the “Minimum Viable Cloud” (MVC) approach comes in.

There are many reasons for an organization to move its workloads to a public cloud platform. Whatever the use case, the goal is to build a secure public cloud platform that meets the requirements to launch at least one application and that engages all the key stakeholders of the organization, so they can explore and experiment with the platform for their own needs. Such a platform is called a minimum viable cloud environment, and the approach is called the MVC approach.

1. This cloud platform should demonstrate the viability of cloud services.

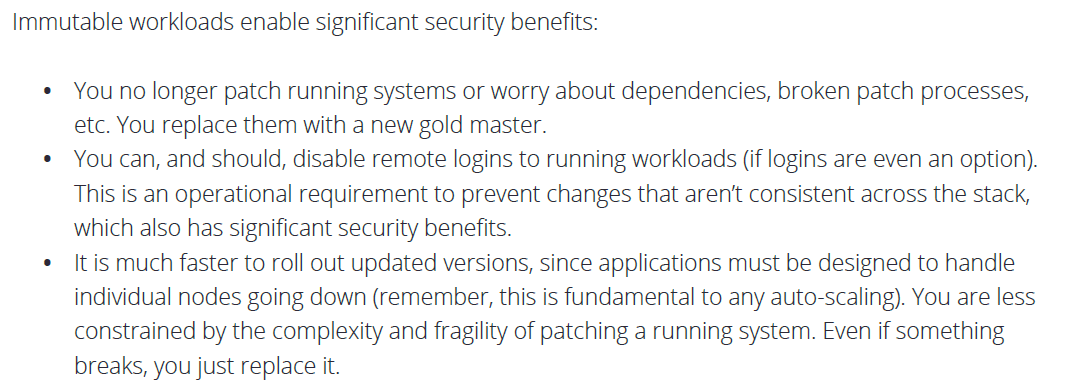

2. This cloud platform should be secure, aligned with Cloud Security Alliance guidelines, and should ensure infrastructure, application, data, and network security.

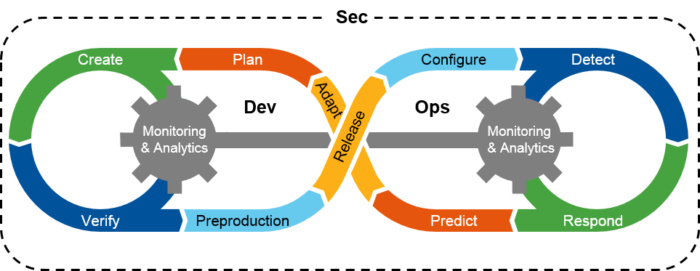

3. This cloud platform may include an Agile mindset, automation, DevSecOps, CI/CD pipelines for infra and apps, virtual/physical connectivity with the on-premises data center, and extended corporate user access.

During the MVC approach, the customer should have the details ready to satisfy the MVC characteristics stated above.

Below are a few use cases for the MVC approach:

1. Deploying a web page with high availability and geographical accessibility

2. Re-hosting an enterprise application with logging & monitoring in place

3. Migrating a Sybase database to MSSQL in the cloud

4. Re-engineering a database to host in public cloud with high security and low cost

5. Building a multi-environment platform with CI/CD for faster application releases.

The MVC approach is a secure and comprehensive way to adopt the cloud!

The “Certificate of Cloud Security Knowledge (CCSK)”, released in 2011, is the first professional certification in the cloud security industry, and it quickly gained momentum. Among the top vendor-neutral cloud security certifications, CCSK & CCSP (Certified Cloud Security Professional) stand ahead of the others.
